We’ve all got them.

Biases abound in our world for a variety of reasons.

But here’s what is not inevitable: Acting on them and allowing them to damage your organization’s good work.

Failing to address different forms of bias within your organization has real negative consequences. The Center for Talent Innovation found that real or perceived bias causes employees at large companies to disengage at a rate three times higher than at workplaces where bias is effectively managed.

Pile on top of that the finding from Gallup that employee disengagement costs U.S. companies up to $550 billion per year and you quickly see the scale of the problem that bias can cause for organizations. Bias has also been shown to negatively impact employee retention and innovation.

Luckily, we live in an age when the conversation around biases (and how to address them) is quite active and fluid. Leaders and organizations now have a great number of tools and resources to educate themselves on the nature of biases as well as how to tackle them.

The first step, as always, is education.

Cognitive biases are essentially errors in thinking: they arise in an individual’s line of reasoning and let personal beliefs distort decisions.

At the same time, it’s important to remember that cognitive biases are not all bad. They’re essentially shortcuts (often called “heuristics”) that help our brains process the massive amounts of information around us and assist us with making quick and effective decisions.

The trouble arises when these shortcuts (and their resulting flaws) limit our creativity and impede innovative thinking, or when they discourage diversity and inclusion. Since our biases rely on past experiences and ways of applying knowledge, those who have had success using that knowledge can find it hard to think differently. Our decisions are guided by these instincts and very sensible shortcuts.

The goal is to keep those biases in check so that you’re addressing the right problems with the appropriate lines of thinking and not repeating similar patterns on multiple projects. Modern organizations must have strategies in place to identify cognitive biases that impede creative thinking, challenge them, and establish better decision-making processes that deliver innovative outcomes.

Let’s dig into how to get that big job done.

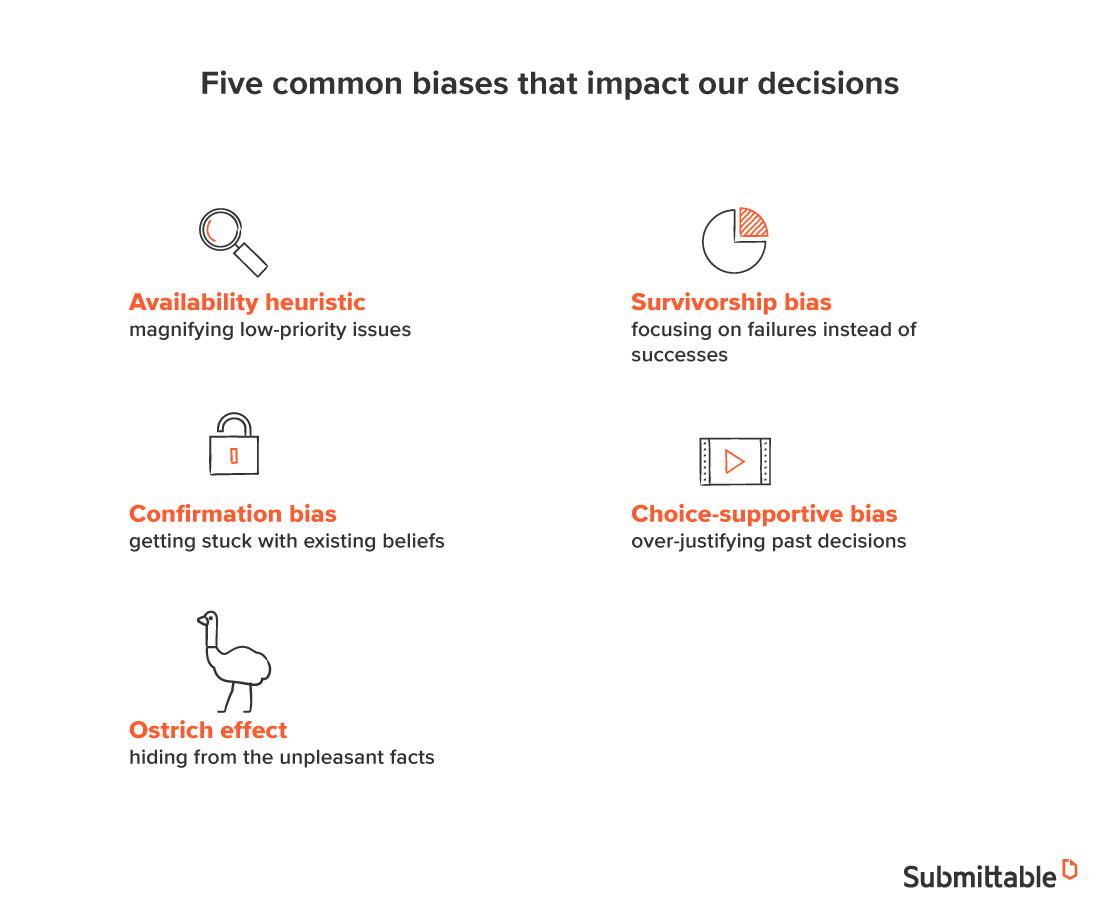

Many types of biases can affect our decisions

There are just so many types of bias out there that it can get exhausting trying to keep track of them all. Especially when you’re considering how they might play out in complex organizational processes.

Here’s a non-exhaustive list of some of the top forms of bias to look out for within your organization, along with some suggestions on how to address them:

1. Availability heuristic

When we estimate the general probability of an event based on anecdotal evidence of recent occurrences, the availability heuristic comes into play.

This happens a lot with things like crime, where a few vivid recent stories can shape our sense of how common it is. It’s a shortcut to help us save time as we strive to determine risk, but such thinking can lead to inaccurate estimates and poor decisions. For example, an employee might predict how a whole market of customers will respond to a new product launch based on a few initial purchases without really digging into the data.

Catching that type of biased thinking early can be critical to organizational stability.
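To make the product-launch example concrete, here is a minimal Python sketch of how little a handful of early purchases can tell you. It uses the Wilson score interval, a standard way to put a range around a proportion. The function name and the purchase counts (4 of 5 early customers versus 400 of 500 later ones) are hypothetical and chosen only for illustration.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (z = 1.96)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    # Clamp to [0, 1] to guard against tiny floating-point overshoot.
    return max(0.0, center - half), min(1.0, center + half)


# Hypothetical numbers: the same 80% purchase rate, seen in two sample sizes.
early_low, early_high = wilson_interval(4, 5)
later_low, later_high = wilson_interval(400, 500)

print(f"First 5 customers:   {early_low:.0%} to {early_high:.0%}")
print(f"First 500 customers: {later_low:.0%} to {later_high:.0%}")
```

Both samples show the same 80% purchase rate, but the plausible range behind the five-customer sample is far wider. That gap is a useful prompt to gather more data before projecting how a whole market will respond.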

2. Survivorship bias

When people or things pass through some form of selection process, we tend to focus on the ones that made it through while ignoring the ones that did not.

This explains why people tend to believe that older cars are more durable than newer ones, even though that claim is inaccurate: the old cars still on the road are only the ones that survived. Survivorship bias makes it too easy to match patterns and confuse correlation with causation. Such cloudy judgment prevents us from digging into the root causes of the challenges we’re facing.

For organizations, survivorship bias could play out when team members cling too tightly to existing product lines with steady sales rather than opening up avenues for innovation and product development for the company to grow its revenue.

Tackling survivorship bias means digging into the details and clarifying the aspects of products and services that have allowed them to endure while ensuring that those qualities infuse other offerings to customers.

3. Confirmation bias

People like to be right.

Confirmation bias shows us how people tend to ignore information that is contrary to their pre-existing thoughts. Especially in today’s digital age, we’re awash in channels and data that can easily back up our own ideas while keeping us siloed off from countervailing facts.

While it’s easy to seek out knowledge that just verifies our current thinking, stepping out of confirmation bias is important when it comes to building stronger rationales for organizational decisions.

The most common example of our age might be political discourse and how it plays out on social media. People are constantly cherry-picking information and seeking out data that confirms their perspectives.

In terms of organizations, it’s easy for business leaders to look at market intelligence that backs up the positions they’re taking on strategic matters. As such, it’s important to ask leaders of companies to directly confront information that counters organizational strategy so that those efforts can be honed to address market realities.

4. Choice-supportive bias

This form of bias is a close relative of confirmation bias, yet slightly different.

Remember that shirt you bought the other day? The one you knew you should return but talked yourself out of returning?

That’s choice-supportive bias—the tendency to give positive attributes to choices that have been made in the past or to forgo other options as a result. When we downplay the disadvantages of certain choices we’ve made, we’re engaging in this form of bias.

5. Ostrich effect

Attempts to avoid negative information constitute the ostrich effect.

This happens a lot in the financial industry. Many investors will make efforts to avoid any negative information regarding their investments.

Though it’s poorly named (as ostriches don’t actually stick their heads into the sand to avoid danger), this bias does explain why people avoid checking their bank and credit card statements so as not to absorb the shock of how much they might be spending.

This bias can leave you unaware of important challenges and dangers that may arise from smaller bits of negative information. Don’t be the ostrich in this case.

Other forms of bias to look out for

The list doesn’t end with those five major types of bias. Here are other types that can keep your team from doing what’s smart and what’s right.

6. Action bias

Don’t let your team feel like they must take action without at least some analysis.

The desire to jump into doing something when there’s ambiguity is the purest form of action bias. It can be incredibly counterproductive when those knee-jerk actions aren’t pushing towards set goals.

When teams are under time pressure, action bias becomes an even bigger issue. Encouraging employees to engage in a little analysis before taking action can keep your team on the right track over the long term.

7. Authority bias

Titles don’t equate to wisdom.

When we favor the opinions of authority figures over other ideas, we’re engaging in authority bias. Even when those other ideas are more creative and better for problem-solving, people have the tendency to lean towards the ideas of senior leaders.

Having a decision-making framework to vet all ideas, regardless of where they originate, can help to mitigate this form of bias.

8. Overconfidence bias

A false sense of one’s skills or talents is a form of overconfidence bias.

This bias can lead people to maintain the illusion of control over certain situations or to believe that time is on their side when it comes to completing specific work. Overconfidence bias can also lead to the desirability effect in which people believe something will occur simply because they want it to.

9. False causality bias

Assuming that one event caused another simply because they happened in sequence is the essence of false causality bias.

This bias often shows up in design thinking exercises in which practitioners are looking to confirm a causal relationship between what users are saying and what they are doing. This can lead teams to come up with inaccurate problem definitions and therefore inaccurate solutions.

10. Self-serving bias

When something wonderful happens, it’s because we are brilliant and talented.

When something bad happens, it’s just rotten luck.

That’s the essence of self-serving cognitive bias, the tendency to attribute the cause of a thing to that which is in our best interest. This bias can lead to a serious lack of critical analysis and can damage an individual’s ability to accurately assess a situation.

11. Herd mentality

Also known as bandwagon bias, herd mentality pushes us to favor ideas already adopted by others.

You see this bias play out in voting and politics, when those who vote later are persuaded to also support the candidates perceived as being “winners.” This is an emotional influence that is often devoid of rigorous analysis.

This type of bias is easily linked to authority bias (i.e. people jumping on board en masse to support an authority figure) and is common in workshop settings. When leading teams, keep an eye on the speed at which certain concepts are adopted by the group to ensure this form of bias isn’t playing a part.

12. Framing cognitive bias

We all think in frames. They shape how we see and operate in the world.

But when individuals make decisions based on how information is presented rather than the facts themselves, framing bias becomes an issue.

Individuals tend to come to different conclusions depending on the way facts are presented, which can lead to incorrect analyses of problems and, therefore, to inadequate solutions.

Rooting out framing bias in organizations involves training employees to practice care in how they present problems and potential solutions. For example, if an employee suggests that “We’ve got too many widgets in stock and they’re not selling fast enough, so we should reduce supply to save money,” they’re framing the issue strongly and even pre-determining a solution for the team.

In that example, an organization would need to look at relative supply levels over time, what’s going on with sales, and even look at production rates to better understand the problem.

Don’t let those frames confuse you!

13. Projection bias

When we overestimate how closely our future preferences will match our current tastes, this is projection bias.

This bias has a particular impact on innovation work as new products are thought up and projected into the future. These products can be over-valued in the market and not necessarily reflective of consumer preferences.

Working with teams to take a more measured and analytical approach to projecting future trends helps to reduce this bias.

14. Narrative fallacy

People love stories. We find them easier to relate to.

This plays out all the time in politics. The candidate with the better stories, regardless of the facts, often wins. People can even be prone to choosing less desirable outcomes when they’ve got a great story attached to them.

This bias is somewhat similar to framing bias in that the “window dressing” around the actual facts tends to win out.

15. Strategic misrepresentation

Play down the costs. Overstate the benefits.

That’s strategic misrepresentation.

People pitching projects such as new business models and innovative concepts often downplay the true costs and overemphasize the benefits in order to win approval.

Managers should have processes in place to accurately assess ideas and see beyond overly optimistic projections.

16. Anchoring bias

You might catch your parents being guilty of this form of bias.

They were used to cheaper prices back in the day, and now they think that everything is too expensive (my father does this all the time).

Anchoring bias refers to the tendency to use older information as a fixed reference point that skews our decision-making. It’s important to remind teams to avoid getting fixated on past data and to consider all information available when making decisions.

17. Hindsight bias

This is the “I told you so” bias.

Once the outcome of an event is known, people tend to believe that they knew the right answer all along, despite that rarely being the case.

We all like to look back and think of ourselves as the “one who got it right” in the moment. This bias is linked to self-serving cognitive bias as we strive to verify our own ways of thinking and processing.

18. Representativeness heuristic

When people think that two similar objects are also correlated with one another, they’re engaging in the representativeness heuristic.

This is a helpful shortcut for our hunter-gatherer brains in terms of sniffing out danger and categorizing natural phenomena to protect ourselves. But in our complex modern world, this form of bias can impede our ability to accurately analyze circumstances and act appropriately.

For example, one organization might see a competitor changing their service offerings to something quite similar to the first organization’s core service and assume that the competitor is looking to move into new territory and compete further. The reality might be that the competitor is desperate for new revenue for various reasons and willing to entertain a merger discussion.

As you can see, countering the representativeness heuristic involves digging deeper into the context of events and not relying on knee-jerk assumptions and categorizations.

19. Status-quo bias

Many of us fear change. We’re creatures of habit.

So goes the status-quo bias.

We fear loss (loss aversion bias) and tend to revert to the way things are as a result. This bias operates on an emotional level and makes us reduce risk and prefer what is familiar.

Such bias can have significant negative impacts on organizational functions such as hiring and product development. When looking for a creative solution to certain problems, watch out for this bias operating within the team.

20. Pro-innovation bias

On the flip side, not all innovation is inherently good.

When people insist that innovations should be widely accepted, regardless of the consequences, this is pro-innovation bias. Such bias can lead to damage to the environment, economic inequality, and other negative impacts.

Ask teams not to judge new ideas and concepts through a pro-innovation filter and to look critically at all of the effects of new concepts.

21. Conformity bias

People like to fit in.

Hence, conformity bias.

The choices of the masses influence how we think and process information, even when they go against specific individual judgments we’ve made. Such bias results in bad decisions and can lead to groupthink, which is a creativity killer.

If you see the team suppressing outside opinions and individuals censoring themselves, take swift action to address this critical loss of independent thinking.

22. Feature positive effect

When our time and resources are limited, we tend to over-emphasize the benefits and disregard the negative consequences of actions.

Closely linked to optimism bias and strategic misrepresentation, the feature positive effect can be influential when a team is taking a deep dive into a specific set of new features for a new product or service. It can lead to teams overlooking key information and taking specific ideas forward without addressing specific flaws.

23. Ambiguity bias

People tend not to like the unknown.

That’s where ambiguity bias comes in. When the outcome is unclear, people tend to favor options that are more predictable over those that are less apparent.

Ambiguity bias can seriously inhibit innovation as it causes teams to favor more well-trodden paths rather than take creative risks that could lead to new discoveries.

24. Loss aversion

The only thing people dislike more than the unknown is losing their stuff.

That’s where loss aversion plays a key role. When teams and individuals fear losing what they have more than they value the chance to gain more, such loss aversion can impede innovative thinking and prevent growth.

Help your teams understand the bigger picture (of gains and losses) in order to set the stage for appropriate risk-taking.

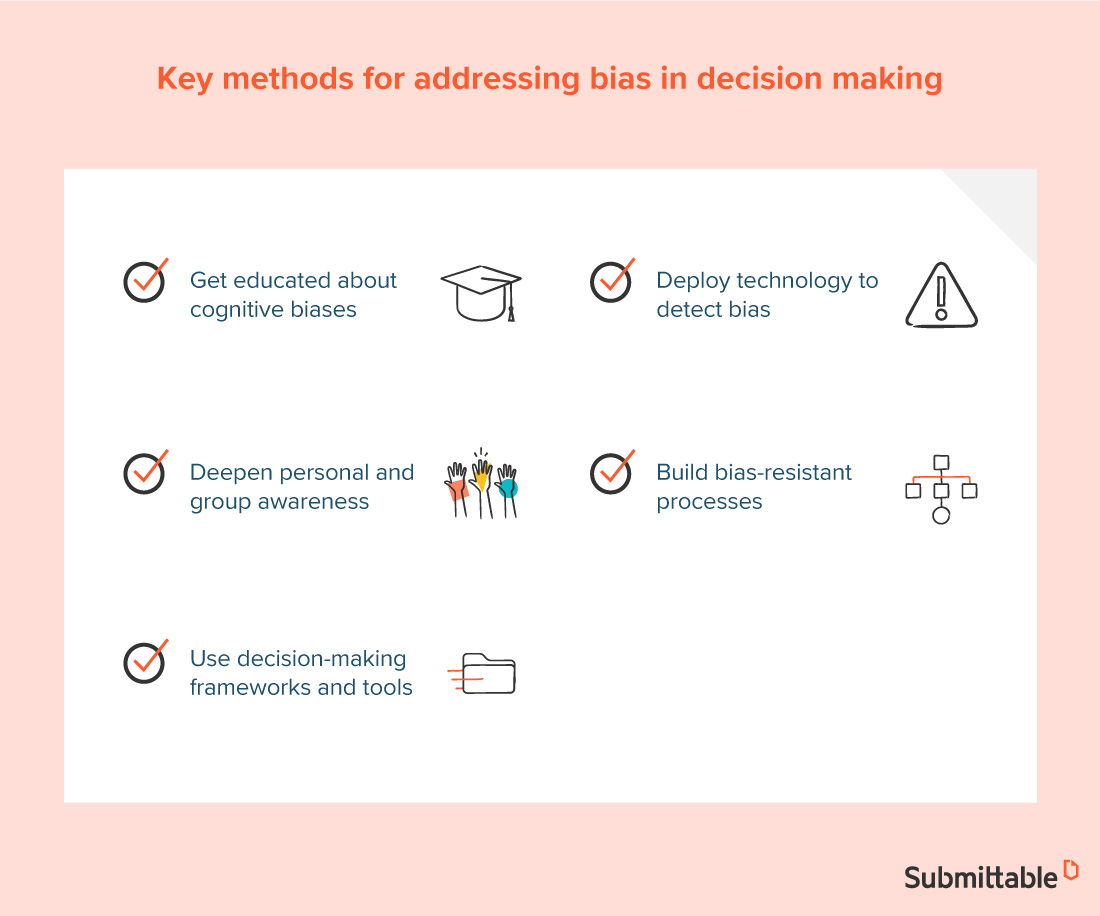

How to root out bias from our decision-making processes

Armed with this education about cognitive biases, you can do a great deal to address them within your organization.

One important step involves increasing personal and group awareness of the biases that exist among teams. Leveraging resources such as the Implicit Association Test and literature such as White Fragility can really help to expand your team’s understanding of personal biases.

Employing specific decision-making frameworks and tools can help teams step through processes that are built to reduce bias. Such frameworks force employees to understand and check their biases by focusing them on a specific methodology for coming to a decision, rather than relying on ingrained precedents.

Technology can also help to sniff out and address bias. Tools such as AI-powered language detectors and KPI measurement tools can help teams determine when specific language is slanted against specific groups or when employees aren’t being assigned an adequate number and quality of tasks due to unconscious bias.

Taken together, deeper education about biases coupled with technological tools and well-designed decision-making frameworks can help organizations build bias-resistant processes that significantly reduce bias in the workplace.

Pulling your team’s work into a centralized social impact platform can help to enhance your organization’s approach to rooting out bias. Building a bias-free organization isn’t simple, but what makes it simpler is giving your team modern software to manage information and tackle bias in a single, unified place.

Now get out there and rid your organization of those pesky biases!